Cloud services are pervasive. From individual users binge-watching Over-The-Top (OTT) video content to enterprises deploying Software-as-a-Service (SaaS), cloud services are how people and organizations consume content and applications. For years, large, centralized data center and cloud architectures have provided access and infrastructure for these services.

Now, a new generation of cloud-native applications is emerging in categories such as entertainment, retail, manufacturing, and automotive, and in many cases these applications will be far more compute-intensive and latency-sensitive than their predecessors. Traditional centralized cloud architectures cannot meet the Quality of Experience (QoE) expectations for these applications and use cases, which will require a more dynamic and distributed cloud model.

As a result, compute and storage resources must move closer to the edge of the network, where content is created and consumed by both humans and machines, to meet the expected QoE. This approach is referred to as the Edge Cloud, where storage and compute are networked at the edge.

What is the Edge Cloud?

A good way to understand the Edge Cloud is through an analogy from the physical world. When e-commerce companies like Amazon started out, they shipped all of their products from a warehouse near their headquarters, in Amazon’s case Seattle. That worked well regionally, but as they expanded nationally, shipping times could not meet their customers’ expectations. To significantly reduce shipping times (analogous to network latency), they built warehouses all over the US, close to their customers, and stocked products (analogous to content) locally. These local warehouses are the physical equivalent of edge data centers. Amazon has since pushed the concept further, building small warehouses in Manhattan that cut shipping times from days to hours for a subset of its products.

So what applications are driving the need for Edge Cloud?

An increasing number of applications require real-time, low-latency responses. The business drivers are not only consumer behavior but also enterprises seeking to improve their operations or to monetize better customer experiences. The emerging use cases below depend on high bandwidth and low latency, and they cover both consumer and enterprise applications:

1) Streaming Video/Content Delivery

As more live and recorded video content is streamed by consumers, both at home and on mobile devices, there’s an increased need to move this content closer to users to improve performance and reduce long-haul networking costs. Content Delivery Networks (CDNs) and local caching have been a traditional use case for edge storage: popular content is cached in the edge data centers closest to where end users consume it.

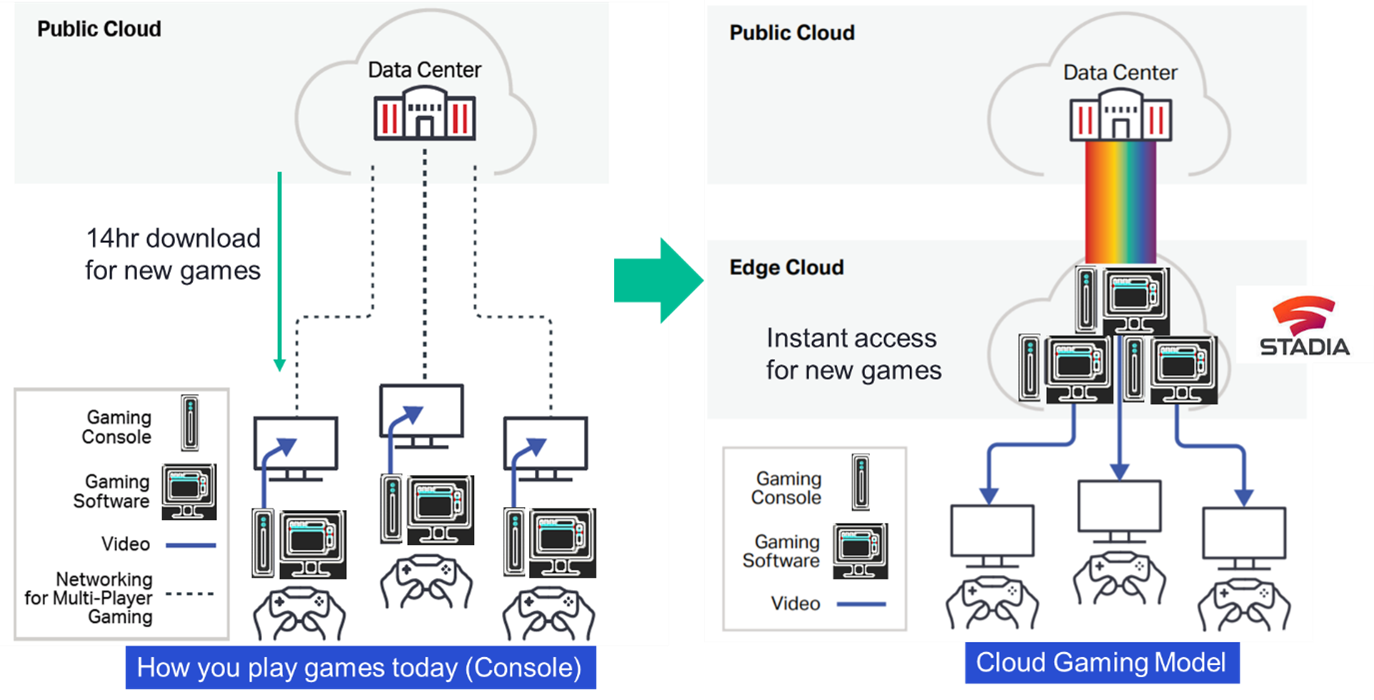
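To make the caching idea concrete, here is a minimal sketch of an edge cache, assuming a least-recently-used (LRU) eviction policy chosen only for brevity; production CDNs use more elaborate policies. The class `EdgeCache` and the `fetch_from_origin` callback are hypothetical names: the callback stands in for the long-haul trip back to a centralized data center.

```python
from collections import OrderedDict
from typing import Callable


class EdgeCache:
    """LRU cache standing in for the storage of a single edge data center.

    Hypothetical sketch: `fetch_from_origin` models the long-haul trip to a
    centralized data center. Real CDNs use more elaborate caching policies.
    """

    def __init__(self, capacity: int, fetch_from_origin: Callable[[str], bytes]) -> None:
        self.capacity = capacity
        self.fetch_from_origin = fetch_from_origin
        self.store: "OrderedDict[str, bytes]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get(self, key: str) -> bytes:
        if key in self.store:
            # Hit: served from the edge, no long-haul traffic.
            self.store.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self.store[key]
        # Miss: fetch once from the origin, then keep a local copy.
        self.misses += 1
        content = self.fetch_from_origin(key)
        self.store[key] = content
        if len(self.store) > self.capacity:
            self.store.popitem(last=False)  # evict the least recently used title
        return content


# Usage: a two-title edge cache serving a short, hypothetical request stream.
origin_requests: list = []


def origin(key: str) -> bytes:
    origin_requests.append(key)  # record each long-haul fetch
    return f"<video {key}>".encode()


cache = EdgeCache(capacity=2, fetch_from_origin=origin)
for title in ["a", "a", "b", "a", "c", "b"]:
    cache.get(title)
print(cache.hits, cache.misses)  # 2 4
print(origin_requests)  # ['a', 'b', 'c', 'b']
```

The popular title "a" crosses the long-haul network only once and is then served locally, which is the cost and latency saving the edge cache is meant to deliver.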

2) Cloud Gaming

In a cloud gaming scenario, gamers no longer require dedicated hardware such as a traditional gaming console. They continue to play using gaming controllers, but they connect to a gaming app on their device of choice (tablet, computer, or smartphone). In an edge computing model, gaming video is streamed to the gamer’s device from a server in an edge compute data center. To match the performance of a local gaming set-up, that video, increasingly in 4K, must be served from the cloud edge so the connection can deliver both low latency and the required bandwidth.

3) Cashier-less Retail Stores

This is a new approach in which retailers (for example, Amazon Go stores) embed cameras in store ceilings to capture images of shoppers selecting goods. AI analyzes these images to determine which goods each shopper has taken, eliminating the need to go through a cashier at the check-out counter; shoppers’ credit cards are billed directly for the goods they purchase. Significant compute and storage resources are needed, either in the retail store or at the edge, to perform this near real-time image processing and deliver a seamless customer experience.

4) Autonomous Driving

Autonomous vehicles are expected to process vast amounts of data from sensors within the car itself. There is also a need, however, to process a subset of this data together with data from adjacent cars and street sensors to manage traffic congestion, road conditions, and accidents. Processing this data and relaying the results back to the cars so they can act must be completed within milliseconds, which requires an edge data center very close to the vehicles to meet these applications’ SLA requirements.

5) Industrial IoT/Smart Manufacturing

This is an initiative around Industry 4.0, in which the manufacturing process is highly customizable and automated. Manufacturing lines are staffed by industrial robots whose functions are carefully controlled by local computing resources that use machine learning and AI to detect defects in the manufacturing process and adjust in near real time. To simplify connectivity on the plant floor, intelligent industrial robots will be connected via 5G (private or carrier-managed) and will require low-latency, and often high-capacity, network performance to keep defect rates low and maximize the safety of local workers.

These applications all share at least one of the following cloud service attributes that drive the need for edge computing:

- Large compute and storage requirements for AI and machine learning processing

- Low-latency performance (under 10 ms) where rapid user feedback is required
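Some back-of-the-envelope arithmetic shows why a sub-10 ms target pulls servers toward the edge. The sketch below assumes light travels through optical fiber at roughly 200 km per millisecond (about two-thirds of its speed in a vacuum) and ignores routing detours, queuing, and radio-access delay, so real-world distances would be shorter still. The 2,000 km distance and the 4 ms processing time are hypothetical values chosen for illustration, and the function names are illustrative only.

```python
# Assumption: light in optical fiber covers roughly 200 km per millisecond.
FIBER_KM_PER_MS = 200.0


def round_trip_propagation_ms(one_way_km: float) -> float:
    """Round-trip propagation delay over fiber for a given one-way distance."""
    return 2 * one_way_km / FIBER_KM_PER_MS


def max_one_way_km(budget_ms: float, processing_ms: float) -> float:
    """Farthest fiber distance to a server before propagation alone uses up
    whatever latency budget remains after processing time is subtracted."""
    remaining_ms = budget_ms - processing_ms
    if remaining_ms <= 0:
        return 0.0  # processing alone already exhausts the budget
    return remaining_ms * FIBER_KM_PER_MS / 2


# Hypothetical centralized data center 2,000 km away: propagation alone is 20 ms.
print(round_trip_propagation_ms(2000))  # 20.0
# With a hypothetical 4 ms of processing, a 10 ms budget allows at most ~600 km.
print(max_one_way_km(budget_ms=10, processing_ms=4))  # 600.0
```

Even under these generous assumptions, a distant centralized region cannot meet a 10 ms target on propagation delay alone, while a nearby edge data center leaves room for processing.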